“Fortune 500 companies spent $7.8 billion ($16 million each) preparing for the roll-out of GDPR and typically spend over $10 million per year (each) to stay in compliance. Meanwhile, small to medium businesses saw their average revenue drop by 2.2% and their profit drop by 8.1% after GDPR took effect. What’s coming to the U.S. will have an even bigger impact.”

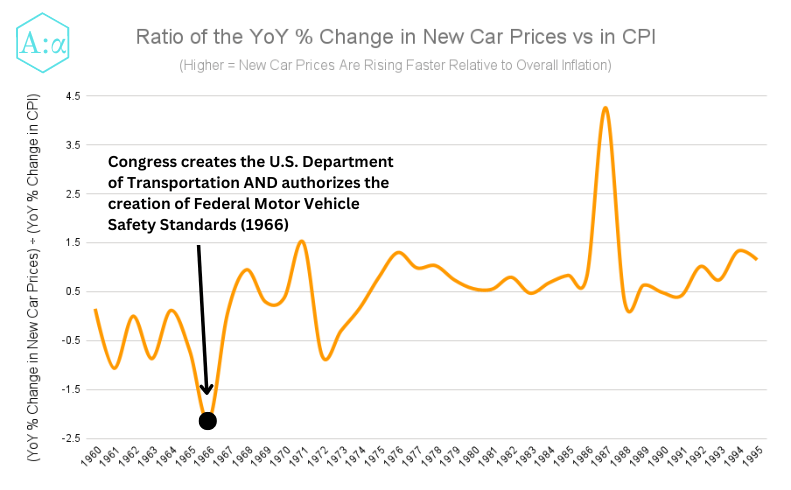

The first Ford Model T car was manufactured in 1908. But the U.S. Department of Transportation and the Federal Motor Vehicle Safety Standards (FMVSS) weren’t created until 58 years later in 1966. Several consequences followed within the next few years:

- Reduced competition. A number of foreign cars disappeared from the American market as soon as the new safety rules went into effect.

- Permanently higher inflation. The inflation rate of new car prices (relative to overall inflation) shifted permanently higher. That reflected higher compliance costs as well as reduced competition from foreign car companies and startups.

- Happy insurance companies. Auto insurance companies benefited from reduced claims expenses on post-safety-regulation cars.

- New startups. A number of startups were formed to help big auto companies comply with the new safety standards. For example, Humanoid Systems (which after a series of mergers and acquisitions is now part of Humanetics) was founded in 1973 and won large contracts with GM and Ford to sell crash test dummies for $20,000 each. That would be $137,000 in 2023 dollars.

- Future-looking companies won big. Companies which were already producing auto safety equipment (such as Autoliv which had been pioneering seat belt technology since 1956) had their growth supercharged by the introduction of regulations that required auto makers to buy the type of products they were already selling.

In other words, automobile regulation took decades to come, but when it did, it destroyed some businesses and was a huge tailwind to profitability for others.

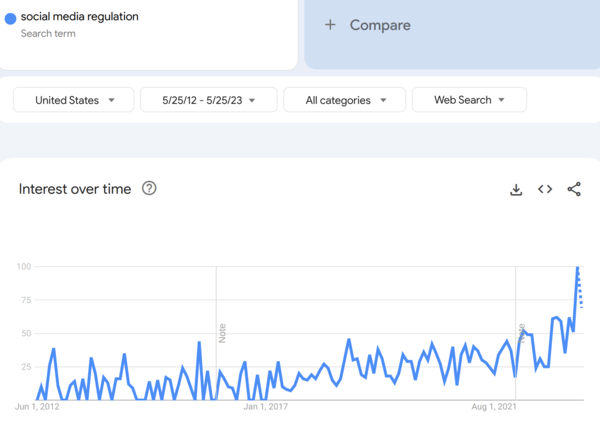

Now consider that Google is only 25 years old, Facebook is only 19 years old, TikTok is only 7 years old, and ChatGPT is less than 6 months old. Tech is still a baby industry in its wild west phase, but the keen observer will have noted a growing number of seemingly unrelated events which are all nevertheless pushing towards the same outcome: substantial legislative reform & new regulations for the U.S. tech industry. Those seemingly unrelated events are:

- An FCC proposal to ban the resale of personal data to multiple third parties (Feb 2023).

- Civil rights lobbying groups agreeing with the Republican version of the American Data Privacy and Protection Act proposed in 2022.

- The DOJ’s antitrust lawsuit against Google for monopolizing digital advertising markets (Jan 2023).

- The EU’s record-breaking data privacy violation fine of $1.3 billion against Meta (May 2023).

- The open letter signed by Elon Musk calling for a pause on training AI systems more powerful than GPT-4 (Mar 2023).

- A bipartisan group of 20 senators sponsoring the EARN IT Act bill which would make section 230 protection contingent on tech companies taking aggressive action to stop the spread of certain content harmful to children (Apr 2023).

- The FTC’s attempt to block Microsoft’s acquisition of Activision as anticompetitive (Apr 2023).

- The class action lawsuits against GitHub, StabilityAI, and Midjourney for intellectual property infringement (Nov 2022 & Jan 2023).

- The U.S. attempt to ban TikTok for reasons of national security and child safety (ongoing).

- The recent Senate hearing on AI regulation with Sam Altman (May 2023).

Those events reflect four core trends that are going to define how tech gets regulated starting (most likely) within the next 3 years:

- Growing consensus and concern over the negative effects of social media on kids.

- Deglobalization and the nationalization of tech supply chains & data that it leads to.

- The rapidly growing risks of and legal disputes over AI.

- An explosion of scams and spam.

In the rest of this article, I’m going to break down each of those trends and the types of new laws and regulations that each is likely to lead to in the U.S.

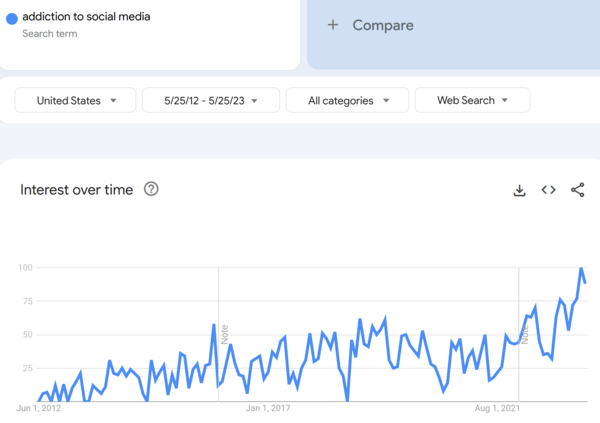

Trend 1: Growing consensus and concern over the negative effects of social media on kids

In 2011, a report by the American Academy of Pediatrics (AAP) described a phenomenon the authors termed “Facebook depression” which was correlated with preteens and teens spending large amounts of time on social media.

Over the next decade, thousands of scientific papers were published on the topic of social media’s effects on kids and young adults. The issues of highest concern included:

- Social media addiction: A compulsion to use and think about social media so much that it interferes with school performance and the ability to form real-world friendships.

- Social comparison orientation (SCO): A tendency to engage in frequent social comparisons, which shapes the decisions individuals make in ways that reduce their enjoyment of life.

- Depression. Rude comments, getting fewer likes on your posts than other people, and algorithmic feedback loops that emerge from engagement with negative content can all contribute to depression in both kids and adults. (NOTE: AI chatbot companies also have an incentive to engage people, and we have already seen this lead to depression and suicide.)

- Cyberstalking (which is already criminalized under anti-stalking, slander, and harassment laws).

- Cyberbullying.

- Digital sexual exploitation of kids by adults posing as kids or trustworthy figures online.

The federal government is very likely to pass legislation to address some or all of these issues in the near future. Some types of regulations that are already being discussed include:

- Child data limits. Prohibitions or restrictions on the collection and/or monetization of data collected from child users of a tech platform.

- Government ID-based or device-based age verification online (as opposed to the simple “click to verify that you are at least 13 years old” popups that some websites use today). If that seems outrageous to you, then just remember that driver’s licenses seemed just as outrageous an idea in 1900, 3 years before the first state implemented a license requirement.

- Child recommendation rules. Prohibitions on which types of content can be algorithmically recommended to kids (there is precedent for this in how Congress and the FCC have limited the types of content which can be broadcast on local TV channels).

- GDPR-like rights to opt out of data collection or to “be forgotten” by a tech company.

- A private right of action (meaning citizens can sue tech companies directly for violating their privacy instead of waiting for the government to do so).

- Elimination of section 230 protections in situations where data privacy rights have been violated.

- A “duty of care”. Some senators want to create a legal “duty of care” that would make tech companies liable if they didn’t take “reasonable” precautions to protect user privacy. Analogy: Just as company executives have a fiduciary duty to their shareholders that goes beyond simply not committing fraud, a duty of care would create a duty of executives to their customers that goes beyond simply not collecting data they aren’t supposed to.

“We must finally hold social media companies accountable for experimenting [on our children] for profit. It’s time to pass bipartisan legislation to stop big tech from collecting personal data on our kids and teenagers online!”

President Biden’s State of the Union speech on Feb 7, 2023

For those remarks, Biden received a bipartisan standing ovation from Congress (shown below).

Trend 2: Deglobalization

Find the common thread among the following events (all of which happened within the last 18 months):

- Russia’s invasion of Ukraine in 2022.

- U.S. and European sanctions on Russia in response to its invasion.

- China’s increasing frequency of military incursions into Taiwanese airspace.

- The Biden administration’s bans on new telecom equipment from Huawei and 4 other Chinese companies, on the grounds of national security concerns.

- The U.S. customs crackdown on the import of Chinese goods manufactured with forced labor.

- China’s criminalization of certain business data gathering activities by foreign companies. And subsequently, China’s raids on foreign consulting firms.

- The still implausible but increasingly serious discussions of a BRICS (Brazil, Russia, India, China, South Africa) common currency.

- The precipitous drop-off in foreign direct investment into China (which in the second half of 2022 hit an 18-year low).

- The U.S.’s ongoing attempt to ban TikTok.

- China’s ban of U.S. Micron computer chips from its infrastructure projects.

- Florida’s ban (by Governor Ron DeSantis, a top contender for U.S. President in 2024) on Chinese nationals being able to purchase property in large swaths of the state.

If you said “rising geopolitical tensions”, you’d be correct! Countries are butting heads more frequently. That’s partly because authoritarian governments like those of China and Russia have grown more powerful over the last few decades and are now trying to expand their international control. It’s also partly because globalization over the past few decades has harmed the working class in places like the U.S. and U.K., and that has created populist nationalism movements.

As a result, international distrust is the highest it’s been in 30 years, and the effects on the tech industry are already happening, with more soon to come. Countries don’t want their supply chains, money, or data security to be reliant on other countries. That’s why the U.S. is trying to onshore its computer chip industry and ban TikTok. And distrust of America’s government surveillance programs is also why the EU just ordered Meta to stop transferring all data on EU citizens to the U.S. In fact, the prime cause of Meta’s current predicament was Ed Snowden’s disclosure of the fact that the U.S. government was, without proper cause, searching through the private data of European citizens.

The regulations being discussed in the U.S. to address these issues include:

- Banning TikTok.

- Creating a federal data privacy law that will ensure U.S. companies aren’t cut off from the data flows of allied countries such as those in the EU. In fact, Biden already issued an executive order last year directing Congress to do this, but they have yet to agree on how.

The second option would actually solve the TikTok concerns too, and would do so better than a TikTok ban. That’s because in the absence of U.S. data privacy rules that apply to ALL companies (not just Chinese ones), even if TikTok is banned, the Chinese government could just buy Americans’ location data from data brokers that in turn get data from American tech companies like Facebook and Google.

REMARK: For some reason, mainstream media companies often use the term “data broker”, but I have not once heard them mention the actual names of data brokerage companies. Some of the biggest data brokers are Acxiom, Epsilon Data Management, LexisNexis, Experian, CoreLogic, Equifax, and several Oracle subsidiaries.

Trend 3: The rapidly growing risks of AI

In the 6 months since ChatGPT was launched, we’ve seen multi-billion dollar businesses such as stackoverflow.com and Chegg.com take serious hits to their revenue. We’ve also seen examples of AI kidnapping scams, AI chatbots encouraging suicide, AI chatbots generating defamatory statements that affect people’s careers, AIs being trained on copyrighted materials without permission, AIs being trained on personal data, and AIs giving inappropriate responses to kids. And of course we also have prominent Silicon Valley figures like Elon Musk and Sam Altman who are actively petitioning the federal government for AI regulation.

On the intellectual property side of things, this has already led to multiple class action lawsuits on behalf of copyright holders whose works were used to train AI systems without receiving any compensation. We also have senators from Tennessee and Minnesota who are advocating for copyright reform to solve this issue and a specific Senate hearing scheduled on this issue.

On the safety side of things, the EU has already begun drafting new AI legislation.

In the U.S., the proposed AI regulations that have the most momentum are:

- “Nutrition Labels” for AI models. These would be required consumer disclosures about what data a model was trained on and how it scores on various bias benchmark tests.

- FDA-like Clinical Trials. AI models would be subject to clinical trial-like testing before they could be put into production. Third-party scientists would participate in auditing the AI models to ensure safety standards were met.

- A federal right-of-action. This would be a statute that goes beyond simply clarifying that section 230 doesn’t apply to generative AI companies. It would also preempt states and ensure that anyone who was harmed by a generative AI company could sue that company in a federal court.

- Copyright Rules for AI. Copyrighted works could not be used to train generative AI models without the copyright holder’s permission. This could be achieved through an amendment to the Digital Millennium Copyright Act (DMCA) of 1998 which itself created this problem by allowing tech companies to copy copyrighted works for certain types of data processing.

- A new AI regulatory agency. This agency would require licensure of any AI models above a certain scale of computational power, number of users, and/or abilities. During a recent hearing, multiple senators compared this to how nuclear reactors or pharmaceutical drugs require licensure.

Trend 4: An explosion of scams and spam

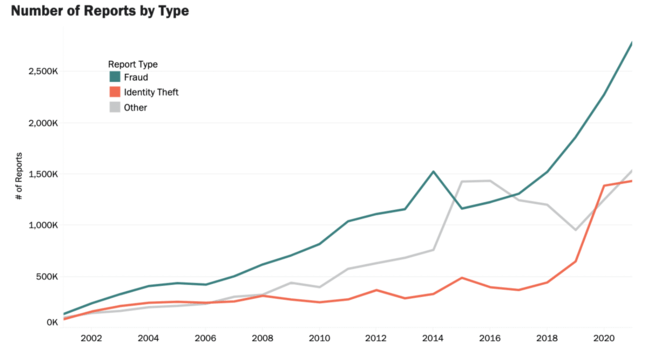

The graph above shows the number of FTC identity theft, fraud, and spam reports over the last two decades. The total number of reports grew from just 330,000 in 2001 to 5.74 million in 2021.

Email spam, text spam, social media comment and DM spam — they are all getting worse, not better.

Personally, I spend at least 10 minutes per day filtering & deleting unwanted emails. Multiplied by 365 days per year and 258 million adult Americans, that adds up to 15.7 billion man-hours of life wasted every year. For reference, it only took 22 million (with an M not a B) man-hours to construct the Burj Khalifa (the tallest building in the world). In other words, in the amount of time Americans spend dealing with spam emails, we could instead build 713 Burj Khalifa towers every year.
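For readers who want to check that math, here is a minimal sketch in Python. It uses only the figures quoted in the paragraph above, and it keeps the same (admittedly rough) assumption that every adult American spends the same 10 minutes per day that I do. The function and constant names are just illustrative.

```python
# Back-of-the-envelope check of the spam time estimate.
# All inputs are the figures quoted in the article; the assumption that
# every adult spends the same 10 minutes per day is an extrapolation
# from one person's experience, not a measured statistic.

MINUTES_PER_DAY = 10                  # time spent filtering/deleting email
DAYS_PER_YEAR = 365
US_ADULTS = 258_000_000               # adult Americans
BURJ_KHALIFA_MAN_HOURS = 22_000_000   # man-hours to build the tower


def annual_spam_hours(minutes_per_day: float, days: int, adults: int) -> float:
    """Total hours per year spent on spam across all adults."""
    return minutes_per_day * days * adults / 60


hours = annual_spam_hours(MINUTES_PER_DAY, DAYS_PER_YEAR, US_ADULTS)
towers = hours / BURJ_KHALIFA_MAN_HOURS

print(f"{hours / 1e9:.1f} billion man-hours per year")
print(f"{int(towers)} Burj Khalifa towers per year")
```

Halving the minutes-per-day input halves both results, so even a much more conservative assumption still leaves you with hundreds of towers' worth of wasted time.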

The primary cause of all this spam is the unregulated sale and resale of personal data collected by companies like Facebook, Google, and Verizon. You click “accept” on the terms of service of one website and eventually your data ends up in a dozen different databases used by thousands of different marketers.

There are several possible regulatory solutions to this that are being considered in the U.S.

- Data sale restrictions. Earlier this year, the FCC proposed a rule to ban the sale of data collected from lead gen forms to multiple third parties.

- A right to be forgotten. Congress is considering passing federal legislation that would provide Americans with a GDPR-like “right to be forgotten” by tech companies and data brokers.

- More liability for tech companies. Some members of Congress have discussed removing Section 230 liability protections for tech companies that fail to adhere to industry best practices to enforce the existing CAN-SPAM law.

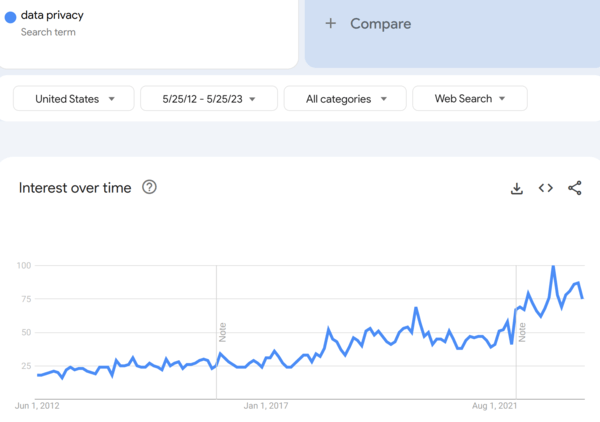

The intersection of social media, deglobalization, AI, and spam = data privacy. Federal U.S. data privacy regulations are both inevitable & imminent.

The intersection of the 4 trends we just discussed (social media harms, deglobalization, AI risks, and a proliferation of spam) is a lack of data privacy.

Data privacy refers to the right of individuals to control their personal information, including where their data may be stored as well as who can access it and for what purposes (apologies to my computer scientist readers who cringe at that definition). Data privacy matters because of what happens when you DON’T have it:

- Bad incentives exist. Tech and telecom companies are incentivized to collect every piece of data they can about every consumer, and that puts consumers at higher risk of being scammed, defrauded, or victimized by identity theft.

- Destruction of the Fourth Amendment. The Fourth Amendment to the U.S. Constitution guarantees that U.S. citizens will not be subject to searches without a warrant. However, many government agencies are already bypassing this by simply purchasing the personal data that they need from data brokers. This is legal and currently happening under existing U.S. law.

- National security risk. China is just as capable of purchasing data from data brokers as is the U.S. government. That’s why a TikTok ban without an accompanying limitation on the resale of data from American companies would be ineffective.

- Reduced competition. A tech company doesn’t have to allow users to export their data, which means that the cost of switching from one software product to a competitor’s can be quite high.

Currently, the U.S. has very weak data privacy laws.

“There is a fundamental conflict of law between the US government’s rules on access to data and European privacy rights, which policymakers are expected to resolve in the summer.”

Meta’s response to their record-breaking $1.3B data privacy violation fine

My Predictions

- Within 3 years (and possibly as soon as this year), Congress will pass federal data privacy legislation. This legislation will:

  - Give U.S. citizens the right to demand tech companies delete any data they have collected from them.

  - Give parents the right to demand social media companies remove any sexually explicit materials of their kids which were posted on their platform.

  - Give EU citizens legal recourse if their data is inappropriately given by a U.S. tech company to the U.S. government (Biden already issued an executive order requiring Congress to pass such a law, and Meta’s $1.3 billion privacy fine ruling is further incentive for the U.S. government to pass such a law).

  - Eliminate section 230 liability protection for tech companies in situations where privacy laws are violated.

  - Create a private right of action for U.S. citizens to sue tech companies if those companies violate their privacy rights provided by this new law.

  - Require that social media companies offer parental controls that allow parents to set limits on how long their kids can use any particular social media app.

- The Digital Millennium Copyright Act will be amended within 10 years to remove the allowance for tech companies to copy materials for processing if such processing is for the purpose of training a generative AI model whose output could reduce the market for the author’s original work.

- Within 10 years, Congress will pass a federal law that requires some sort of disclosure about the training data and/or biases of recommendation algorithms and generative AI models for companies with more than a minimum number of U.S. users (probably 1-10 million).

- Within 30 years, Congress will create a new federal agency to create and enforce safety standards for tech companies, including social media companies and AI companies, over a certain size.

P.S. (Completely unrelated): Nvidia’s stock reached a $1 trillion market cap yesterday. It’s a big enough bubble that I finally opened a bearish option position to monetize the bubble’s eventual pop. I’d be happy to bet an expensive dinner on RSP outperforming NVDA over the next 5 years if you think Nvidia’s valuation is justified.

Also if you enjoyed this article, you can subscribe to my newsletter (for free!) to get more like it: The Axiom Alpha Letter

Appendix A: Timeline of Automotive Regulation

- 1908 – The first production Model T is built.

- 1910 – New York is the first state to outlaw drunk driving.

- 1920 – The first 4-way intersection, 3-color (red/yellow/green) traffic light is created and erected (by a Detroit police officer).

- 1956 – Ford begins offering seat belts as an optional safety feature that customers could upgrade to.

- 1958 – Congress passes the Automobile Information Disclosure Act which requires all new automobiles to carry a sticker on the window (Monroney label) containing important information about the vehicle including its MSRP, engine and transmission specs, standard equipment, and warranty details.

- 1959 – This is the first year when all states and D.C. have implemented laws requiring exam-based drivers’ licenses in order to operate an automobile.

- 1965 – Activist Ralph Nader publishes the book “Unsafe at Any Speed” which crystallizes what had been several years of gradually souring attitude of the public towards the increasing numbers of deaths and injuries from car accidents. The book sparks a national debate and motivates Congress to pass laws the next year in order to regulate the automobile industry.

- 1966 – Congress creates the U.S. Department of Transportation and passes the National Traffic and Motor Vehicle Safety Act which authorizes the creation of the Federal Motor Vehicle Safety Standards (FMVSS).

- 1967 – The first FMVSS goes into effect, requiring auto makers to include seat belts as standard equipment.

- 1968 – The other FMVSS become effective on January 1st. The new requirements include things like padded dashboards, labeled controls, twin-circuit brakes, two-speed wipers that covered a minimum percentage of windscreen area, a left-hand outside mirror, four-way hazard flasher lights, an energy-absorbing steering column, front and rear side marker lights or reflectors, windscreens with thicker interlayer safety glass, and a requirement that all vehicles pass a 30 mph crash test into a concrete barrier while demonstrating survivability of standardized test dummies in the front seats.

- 1970 – Congress passes the Highway Safety Act of 1970 which establishes the National Highway Traffic Safety Administration (NHTSA) which is charged with creating and enforcing FMVSS.

- 1973 – The NHTSA requires new cars to pass side-impact safety tests.

- 1974 – Congress passes a law that established a national maximum speed limit of 55 miles per hour. States had to agree if they wanted federal funding for highway repair. This was partly motivated by safety concerns, but it was also motivated by a desire to reduce America’s fuel consumption in the face of the OAPEC oil embargo from October 1973 to March 1974, during which time the price of oil globally had roughly quadrupled.

- 1982 – A study found that 83% of drivers on New York interstate highways violated the speed limit, despite extreme penalties such as a $100 fine (equivalent to $314 today) or 30 days in jail for a first offense.

- 1984 – New York state passes the first law requiring seat belt use in passenger cars.

- 1985 – 24 states (including NY and CA) have outlawed drunk driving, but 26 (including FL) have not.

- 1988 – A study found that 85% of drivers on Connecticut’s rural interstates violated the 55mph speed limit.

- 1991 – Congress passes the Intermodal Surface Transportation Efficiency Act (ISTEA) which mandates that passenger automobiles and light trucks must include airbags for the driver and front passenger starting in 1998.

- 1995 – 21 years after its creation, the national maximum speed limit law is repealed by Congress, and full speed limit-setting authority is returned to the individual states.

Appendix B: How Much Did GDPR Cost?

Forbes reported that Fortune 500 companies spent $7.8 billion preparing for the roll-out of GDPR. However, that leaves out the majority of U.S. companies that were subject to GDPR.

Researchers at the Oxford Martin School estimated that GDPR cost affected businesses an 8.1% decline in profit and a 2.2% drop in sales, on average, with larger declines in smaller companies.

And in addition to those steep costs for compliance and lost sales, companies were still fined. In the 5 years since GDPR took effect in 2018, Meta alone has been fined more than $2 billion, Amazon has been fined close to $1 billion, and roughly 1,000 penalties have been issued against small to medium companies.

Appendix C: Schrems 2020 Court Case Explained

In 2020, the Court of Justice of the European Union (CJEU) ruled in the case “Data Protection Commission v. Facebook Ireland, Schrems” that the European Commission’s adequacy decision for the EU-U.S. Privacy Shield Framework, upon which more than 5,000 U.S. companies relied to conduct trans-Atlantic trade in compliance with GDPR, was invalid. In particular, the CJEU ruled that U.S. government access to private sector data was not limited to what is strictly necessary and proportional as required by EU law, and hence does not meet the requirements of Article 52 of the EU Charter of Fundamental Rights. Additionally, the CJEU determined that with regard to U.S. government surveillance, EU data subjects lack actionable judicial redress and therefore do not have a right to an effective remedy in the U.S., as required by Article 47 of the EU Charter.