Last week, Biden announced a new set of AI safety rules that will apply to seven of the largest AI companies: Amazon, Google, Meta, Microsoft, OpenAI, Anthropic, and Inflection. The new rules require that AI-generated content be watermarked, that AI systems undergo cybersecurity and capability testing before release to the general public, and that AI systems be made available to external vulnerability auditors.

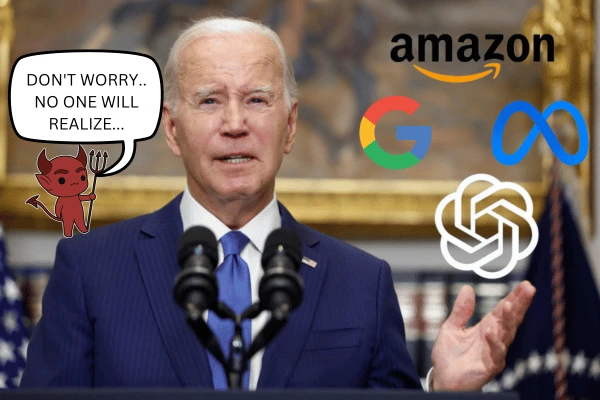

However, the announcement is pure theater. The new rules are not regulations or laws. They are just voluntary commitments by the tech companies involved — voluntary commitments to safety measures that the companies have already implemented or are working on implementing. The voluntary deal accomplishes nothing that wasn’t already going to happen, despite Biden emphasizing in a press statement that the commitments were “real”.

In fact, pushing the misleading narrative that AI companies are adopting new safety rules may actually lower the probability that AI safety legislation is passed in the U.S. anytime soon. When such legislation is eventually passed (and make no mistake, it will be), it will likely include provisions similar to the requirements in this voluntary deal. Why do I say that? Because it’s what happened to the car industry 80 years ago.
