Large language models (LLMs) are the core technology behind ChatGPT and Bard. They also open up an amazing opportunity for aspiring entrepreneurs to build sophisticated software apps. In this article, I describe 8 powerful tools that software developers can use to build apps with LLMs.

1. OpenAI API

OpenAI has an API that gives developers access to GPT-4 and the other LLMs created by OpenAI. This API is at the heart of many AI apps. The API pricing for each model is summarized in the table below.

| Model | Pricing |
| --- | --- |
| GPT-4 (8K context) | $0.03 / 1K tokens (prompt), $0.06 / 1K tokens (completion) |
| GPT-4 (32K context) | $0.06 / 1K tokens (prompt), $0.12 / 1K tokens (completion) |
| Chat (gpt-3.5-turbo) | $0.002 / 1K tokens |
| InstructGPT (Ada) | $0.0004 / 1K tokens |
| InstructGPT (Babbage) | $0.0005 / 1K tokens |
| InstructGPT (Curie) | $0.002 / 1K tokens |
| InstructGPT (Davinci) | $0.02 / 1K tokens |
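
Because prices are quoted per 1K tokens, and GPT-4 charges different rates for prompt and completion tokens, the cost of one request is (prompt tokens / 1000) × prompt rate + (completion tokens / 1000) × completion rate. Here is a minimal Python sketch using the rates from the table above (they may have changed since). The dictionary keys are my own labels, not necessarily the model IDs the API expects.

```python
# USD per 1K tokens, copied from the pricing table above (rates may have changed).
# The keys are illustrative labels, not guaranteed API model IDs.
PRICING_PER_1K = {
    "gpt-4-8k": {"prompt": 0.03, "completion": 0.06},
    "gpt-4-32k": {"prompt": 0.06, "completion": 0.12},
    # gpt-3.5-turbo has a single rate, so prompt and completion are the same.
    "gpt-3.5-turbo": {"prompt": 0.002, "completion": 0.002},
}


def estimate_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Estimate the USD cost of a single request from its token counts."""
    rates = PRICING_PER_1K[model]
    return (prompt_tokens / 1000) * rates["prompt"] + (completion_tokens / 1000) * rates["completion"]


if __name__ == "__main__":
    # A request with a 1,500-token prompt and a 500-token completion.
    for model in PRICING_PER_1K:
        print(f"{model}: ${estimate_cost(model, 1500, 500):.4f}")
```

For that request, GPT-4 (8K) comes to $0.075 while gpt-3.5-turbo comes to $0.004, which is why it can pay to send simpler requests to the cheaper model.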

You can also create your own custom models by fine-tuning some of OpenAI’s base LLMs. Fine-tuned models have token-based pricing for both training and subsequent usage.

| Model | Training Cost | Usage Cost |
| --- | --- | --- |
| Ada | $0.0004 / 1K tokens | $0.0016 / 1K tokens |
| Babbage | $0.0006 / 1K tokens | $0.0024 / 1K tokens |
| Curie | $0.003 / 1K tokens | $0.012 / 1K tokens |
| Davinci | $0.03 / 1K tokens | $0.12 / 1K tokens |
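
Whether fine-tuning is worth it depends on the one-off training cost plus the ongoing usage rate, compared with just calling a base model. The sketch below compares a fine-tuned Curie model against base Davinci for a made-up workload, using the rates from the two tables above. I treat `training_tokens` as the total number of tokens billed for training; that can exceed your dataset size if training runs over several epochs, so check OpenAI’s fine-tuning docs for how it is counted.

```python
# USD per 1K tokens, copied from the fine-tuning table above (rates may have changed).
FINE_TUNE_RATES = {
    # model: (training rate, usage rate)
    "ada": (0.0004, 0.0016),
    "babbage": (0.0006, 0.0024),
    "curie": (0.003, 0.012),
    "davinci": (0.03, 0.12),
}
BASE_DAVINCI_RATE = 0.02  # InstructGPT (Davinci) rate from the first table


def fine_tuned_cost(model: str, training_tokens: int, usage_tokens: int) -> float:
    """One-off training cost plus usage cost for a fine-tuned model."""
    train_rate, usage_rate = FINE_TUNE_RATES[model]
    return training_tokens / 1000 * train_rate + usage_tokens / 1000 * usage_rate


def base_model_cost(usage_tokens: int, rate_per_1k: float = BASE_DAVINCI_RATE) -> float:
    """Usage cost for a base (not fine-tuned) model."""
    return usage_tokens / 1000 * rate_per_1k


if __name__ == "__main__":
    # Hypothetical workload: 2M billed training tokens, then 10M tokens of usage.
    print("fine-tuned curie:", round(fine_tuned_cost("curie", 2_000_000, 10_000_000), 2))
    print("base davinci:", round(base_model_cost(10_000_000), 2))
```

With these hypothetical volumes, fine-tuned Curie costs about $126 in total versus about $200 for base Davinci, so fine-tuning a smaller model can pay off if it performs well enough on your task. Fine-tuning Davinci itself is a different trade: its usage rate is 6x the base Davinci rate.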

OpenAI also has APIs that complement its LLM APIs by offering text embedding and text-to-image capabilities. Pricing for those APIs is listed on OpenAI’s pricing page.

2. LangChain

LangChain is an open-source framework, available in Python and JavaScript, for developing LLM-based apps. In particular, LangChain lets you “chain” together LLMs, user prompts, and third-party apps.

[3] LangChain Python repo on GitHub

[4] LangChain JavaScript repo on GitHub
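
To make the idea of “chaining” concrete, here is a plain-Python sketch of the pattern. It is not LangChain’s actual API: it just composes a prompt template, a model call, and an output parser into one pipeline, which is the kind of plumbing LangChain provides through its own classes. `fake_llm` is a hypothetical stand-in for a real model call so the example runs offline.

```python
from typing import Callable

# A "step" is any function that takes a string and returns a string.
Step = Callable[[str], str]


def chain(*steps: Step) -> Step:
    """Compose steps left to right: each step's output feeds the next step."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


def prompt_template(question: str) -> str:
    # Wrap the raw user input in an instruction for the model.
    return f"Answer in one short sentence.\nQuestion: {question}\nAnswer:"


def fake_llm(prompt: str) -> str:
    # Hypothetical placeholder for a real LLM call (e.g. OpenAI's API or Dolly).
    # It just echoes the question back, padded with whitespace like raw model output.
    question = prompt.split("Question: ", 1)[1].split("\n", 1)[0]
    return f"  You asked about: {question}  "


def clean_output(text: str) -> str:
    # Post-process the raw model output before the app uses it.
    return text.strip()


qa_chain = chain(prompt_template, fake_llm, clean_output)

if __name__ == "__main__":
    print(qa_chain("What is LangChain?"))
```

Swapping `fake_llm` for a real model call, or inserting extra steps such as a document lookup, leaves the rest of the pipeline unchanged, which is the main appeal of structuring an app as a chain.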

3. Steamship

Steamship is essentially Heroku for LLM apps: a managed platform on which you can quickly deploy and host LangChain apps without running your own infrastructure.

4. Dolly 2.0

Dolly 2.0 is an open-source LLM, licensed even for commercial use, that is designed for ChatGPT-like interaction with humans. You can use it as a replacement for OpenAI’s GPT API if you want more control over your app’s functionality and cost. The tradeoff is lower model capability and higher operational complexity, since you have to set up and run the model yourself.

[2] Databricks: Free Dolly: Introducing the world’s first truly open instruction-tuned LLM

5. Pinecone

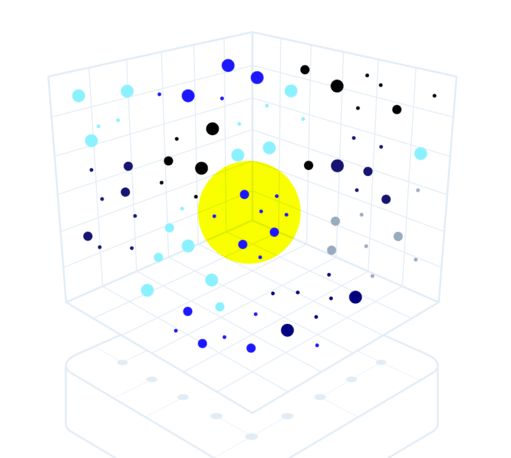

Pinecone is a vector database built for high-performance vector search applications. It is well suited to storing vector embeddings of text, images, and/or video data. One useful application of such a database is to give an LLM the ability to perform a semantic search over a particular dataset (e.g., a user’s cloud drive, a set of company documents, or a collection of pictures).

[1] LangChain documentation for using Pinecone
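
Semantic search of this kind boils down to ranking stored embeddings by their similarity to the embedding of a query. The sketch below does that by brute force in memory with cosine similarity, purely to show the idea; it is not Pinecone’s client API. Pinecone lets you choose the similarity metric and is designed to avoid comparing the query against every stored vector. The 3-dimensional vectors and file names are made up; real embeddings come from an embedding model and have far more dimensions.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: List[float], index: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Brute-force search: score every stored vector and return the k best matches."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy "embeddings" keyed by hypothetical document IDs.
index = {
    "invoice.pdf": [0.9, 0.1, 0.0],
    "holiday-photo.jpg": [0.0, 0.2, 0.9],
    "budget.xlsx": [0.8, 0.3, 0.1],
}
# Pretend this is the embedding of "How much did we spend last quarter?"
query = [1.0, 0.2, 0.0]

if __name__ == "__main__":
    for doc_id, score in top_k(query, index):
        print(f"{doc_id}: {score:.3f}")
```

The retrieved documents (here the invoice and the budget, not the photo) would then be passed to the LLM as context, which is how a vector database gives the model search over data it was never trained on.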

6. Hugging Face Hub

Hugging Face Hub is a platform where users can share datasets and pre-trained AI models. It is somewhat like GitHub in terms of code-sharing and collaboration features.

Hugging Face Hub also includes Hugging Face Spaces, a hosted service where users can build and deploy web-based demos of AI apps using Gradio or Streamlit.

7. DeepSpeed Chat

DeepSpeed Chat (DS-Chat) is an open-source Microsoft framework that takes a pre-trained Hugging Face model and runs it through the 3 steps of InstructGPT-style RLHF (Reinforcement Learning from Human Feedback) training (supervised fine-tuning, reward model training, and reinforcement learning) needed to produce a model that interacts with humans the way ChatGPT does. Microsoft reports that DS-Chat runs this training over 15x faster than prior RLHF systems, which makes it significantly cheaper.

[1] Microsoft blog post announcing DeepSpeed Chat

8. Eleven Labs

Eleven Labs lets you create voice replicas of real people (such as yourself). You can use this to turn the text output of an LLM into spoken audio in your app.